library(tidyverse)

library(tidymodels)

set.seed(123)

AE 17: Traffic fines and electoral politics - regression with multiple predictors

Suggested answers

In this application exercise we will replicate part of the analysis from Playing politics with traffic fines: Sheriff elections and political cycles in traffic fines revenue.1 The abstract of the article states:

1 Su, Min, and Christian Buerger. 2025. “Playing politics with traffic fines: Sheriff elections and political cycles in traffic fines revenue.” American Journal of Political Science 69: 164–175. https://doi.org/10.1111/ajps.12866

The political budget cycle theory has extensively documented how politicians manipulate policies during election years to gain an electoral advantage. This paper focuses on county sheriffs, crucial but often neglected local officials, and investigates their opportunistic political behavior during elections. Using a panel data set covering 57 California county governments over four election cycles, we find compelling evidence of traffic enforcement policy manipulation by county sheriffs during election years. Specifically, a county’s per capita traffic fines revenue is 9% lower in the election than in nonelection years. The magnitude of the political cycle intensifies when an election is competitive. Our findings contribute to the political budget cycle theory and provide timely insights into the ongoing debate surrounding law enforcement reform and local governments’ increasing reliance on fines and fees revenue.

We will use {tidyverse} and {tidymodels} for data exploration and modeling, respectively.

The replication data file can be found in data/traffic_fines.csv. Let’s load the data and take a look at the first few rows.2

2 The codebook is available from Dataverse. The data set has been lightly cleaned for the application exercise.

traffic_fines <- read_csv("data/traffic_fines.csv")

Rows: 1025 Columns: 95

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

chr (2): county_name, elec_dummy

dbl (93): year, county_code, vehicle_code_fines, vehicle_code_fines_i_p, she...

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

glimpse(traffic_fines)

Rows: 1,025

Columns: 95

$ year <dbl> 2003, 2004, 2005, 2006, 2007, 2008, 2009, 2010,…

$ county_code <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

$ county_name <chr> "Alameda", "Alameda", "Alameda", "Alameda", "Al…

$ vehicle_code_fines <dbl> 4973766, 5701454, 4645572, 5256212, 5498544, 61…

$ vehicle_code_fines_i_p <dbl> 3.390001297, 3.789429426, 2.998037100, 3.274991…

$ elec_dummy <chr> "No", "No", "No", "Yes", "No", "No", "No", "Yes…

$ sheriff_incumb <dbl> NA, NA, NA, 0, NA, NA, NA, 1, NA, NA, NA, 1, NA…

$ pre_elec <dbl> 3, 2, 1, 0, 3, 2, 1, 0, 3, 2, 1, 0, 3, 2, 1, 0,…

$ no_incumb <dbl> 0, 0, 0, 1, 0, 0, 0, NA, 0, 0, 0, NA, 0, 0, 0, …

$ incumb <dbl> 0, 0, 0, NA, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1…

$ sheriff_margin <dbl> NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA, NA,…

$ rep_share <dbl> 24.12914, 23.34603, 23.34603, 23.34603, 23.3460…

$ dem_share <dbl> 69.36355, 75.36176, 75.36176, 75.36176, 75.3617…

$ otherparty_share <dbl> 6.507315, 1.292216, 1.292216, 1.292216, 1.29221…

$ white_share <dbl> 38.81055, 38.18618, 37.55138, 36.97749, 36.3921…

$ asian_share <dbl> 22.9700470, 23.4097118, 23.8579330, 24.2536736,…

$ black_share <dbl> 13.79221725, 13.57556534, 13.35229397, 13.18081…

$ hispanic_share <dbl> 20.249336, 20.555365, 20.875692, 21.132017, 21.…

$ other_share <dbl> 4.177846, 4.273178, 4.362700, 4.455998, 4.54087…

$ young_drivers <dbl> 13.747941, 13.883005, 14.037847, 14.106328, 14.…

$ density <dbl> 1778.408447, 1776.412109, 1769.553955, 1775.562…

$ areain_square_miles <dbl> 825, 825, 825, 825, 825, 825, 825, 825, 825, 82…

$ med_inc <dbl> 56225, 57659, 60937, 64285, 68263, 70217, 68258…

$ unemp <dbl> 6.9, 5.9, 5.1, 4.4, 4.7, 6.2, 10.3, 10.9, 10.1,…

$ own_source_share <dbl> 41.07184, 36.20716, 41.50222, 42.68028, 45.6660…

$ emp_goods <dbl> 117444, 119457, 119701, 120023, 117638, 113131,…

$ emp_service <dbl> 450019, 440813, 445607, 453242, 459652, 466141,…

$ pay_goods_i <dbl> 58498.00, 59739.12, 57935.97, 59750.71, 59243.0…

$ pay_service_i <dbl> 44602.00, 45911.36, 46548.32, 47463.97, 48513.6…

$ arte_share <dbl> 1.5740343, 1.6364014, 1.6177933, 1.6885616, 1.6…

$ collect_share <dbl> 2.1427305, 2.1672187, 2.1728802, 2.1599128, 2.1…

$ cnty_le_sworn_1000p <dbl> 2.063813, 1.977428, 2.022766, 1.987249, 2.01029…

$ felony_tot_1000p <dbl> 14.047289, 13.687105, 13.538766, 12.706516, 13.…

$ misdemeanor_tot_1000p <dbl> 29.27370, 28.31380, 25.42603, 24.42999, 25.4998…

$ forfeitures_i_p <dbl> 6.1655664, 3.4768946, 6.1081676, 1.0144602, 1.3…

$ other_court_fines_i_p <dbl> 1.55856478, 0.08475058, 2.31785750, 1.49219465,…

$ delinquent_fines_i_p <dbl> 1.4636116, 0.7356105, 0.7866636, 0.8906603, 0.8…

$ i_trend_1 <dbl> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, …

$ i_trend_2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_3 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_4 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_5 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_6 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_7 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_8 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_9 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_10 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_11 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_12 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_13 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_14 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_15 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_16 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_17 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_18 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_19 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_20 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_21 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_22 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_23 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_24 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_25 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_26 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_27 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_28 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_29 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_30 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_31 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_32 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_33 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_34 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_35 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_36 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_37 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_38 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_39 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_40 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_41 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_42 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_43 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_44 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_45 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_46 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_47 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_48 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_49 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_50 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_51 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_52 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_53 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_54 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_55 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_56 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ i_trend_57 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

$ vic_margin <dbl> NA, NA, NA, 0, NA, NA, NA, 0, NA, NA, NA, 0, NA…

Review: why was the original model bad?

Recall the question we sought to answer last class: do politicians manipulate government policy during electoral years in an effort to gain an electoral advantage? We used a simple linear regression model to evaluate the hypotheses:

- Null hypothesis: There is no linear relationship between whether or not it is an election year and per capita traffic fines revenue.

  \[H_0: \beta_1 = 0\]

- Alternative hypothesis: There is some linear relationship between whether or not it is an election year and per capita traffic fines revenue.

  \[H_A: \beta_1 \neq 0\]

# fit the model

fines_elec_dummy_fit <- linear_reg() |>

  fit(vehicle_code_fines_i_p ~ elec_dummy, data = traffic_fines)

tidy(fines_elec_dummy_fit)

# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 3.90 0.256 15.2 2.84e-47

2 elec_dummyYes 0.0171 0.543 0.0315 9.75e- 1

# evaluate the hypotheses

# calculate observed fit

obs_fit <- traffic_fines |>

  specify(vehicle_code_fines_i_p ~ elec_dummy) |>

  fit()

# generate permuted null distribution

null_dist <- traffic_fines |>

  specify(vehicle_code_fines_i_p ~ elec_dummy) |>

  hypothesize(null = "independence") |>

  generate(reps = 1000, type = "permute") |>

  fit()

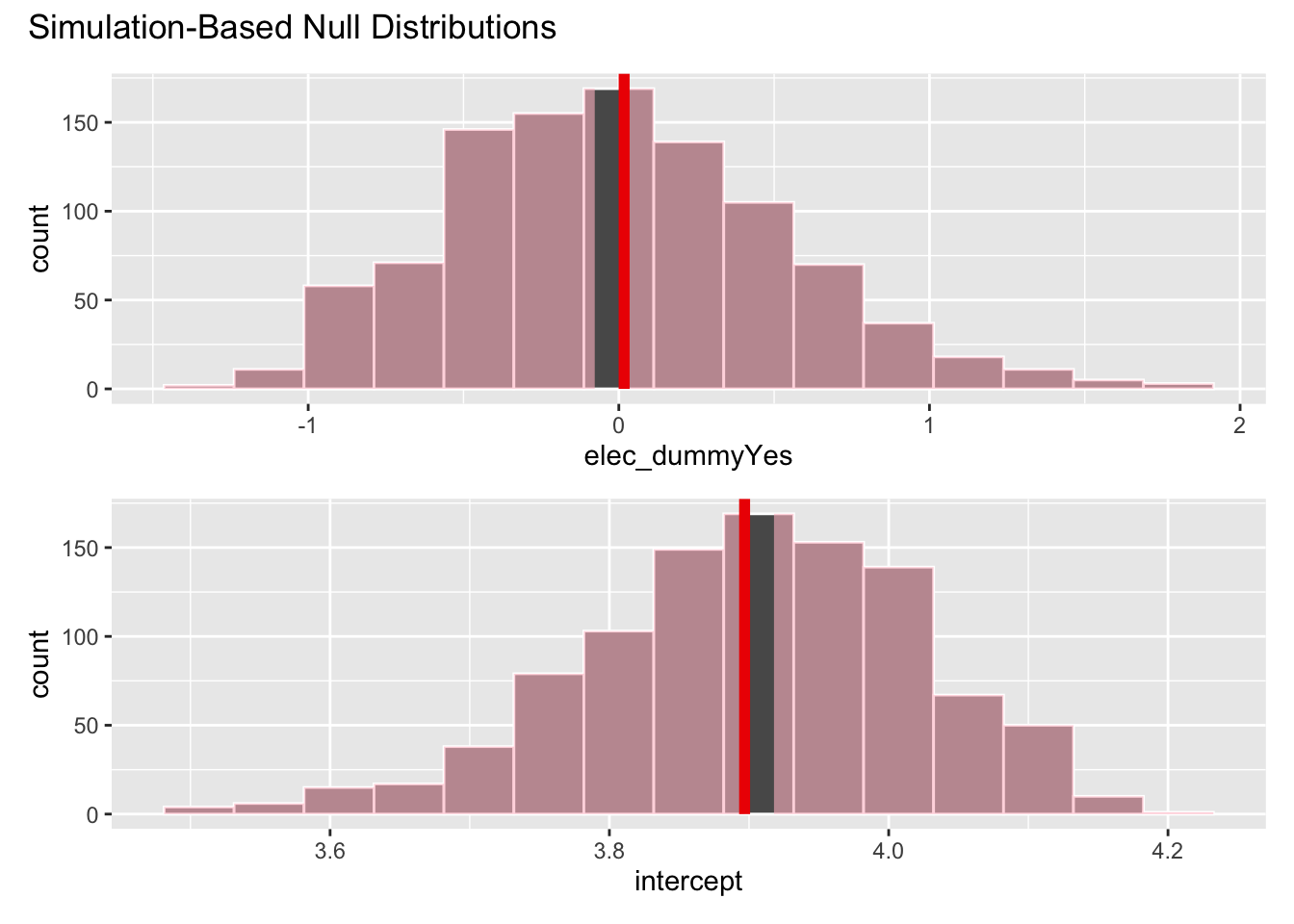

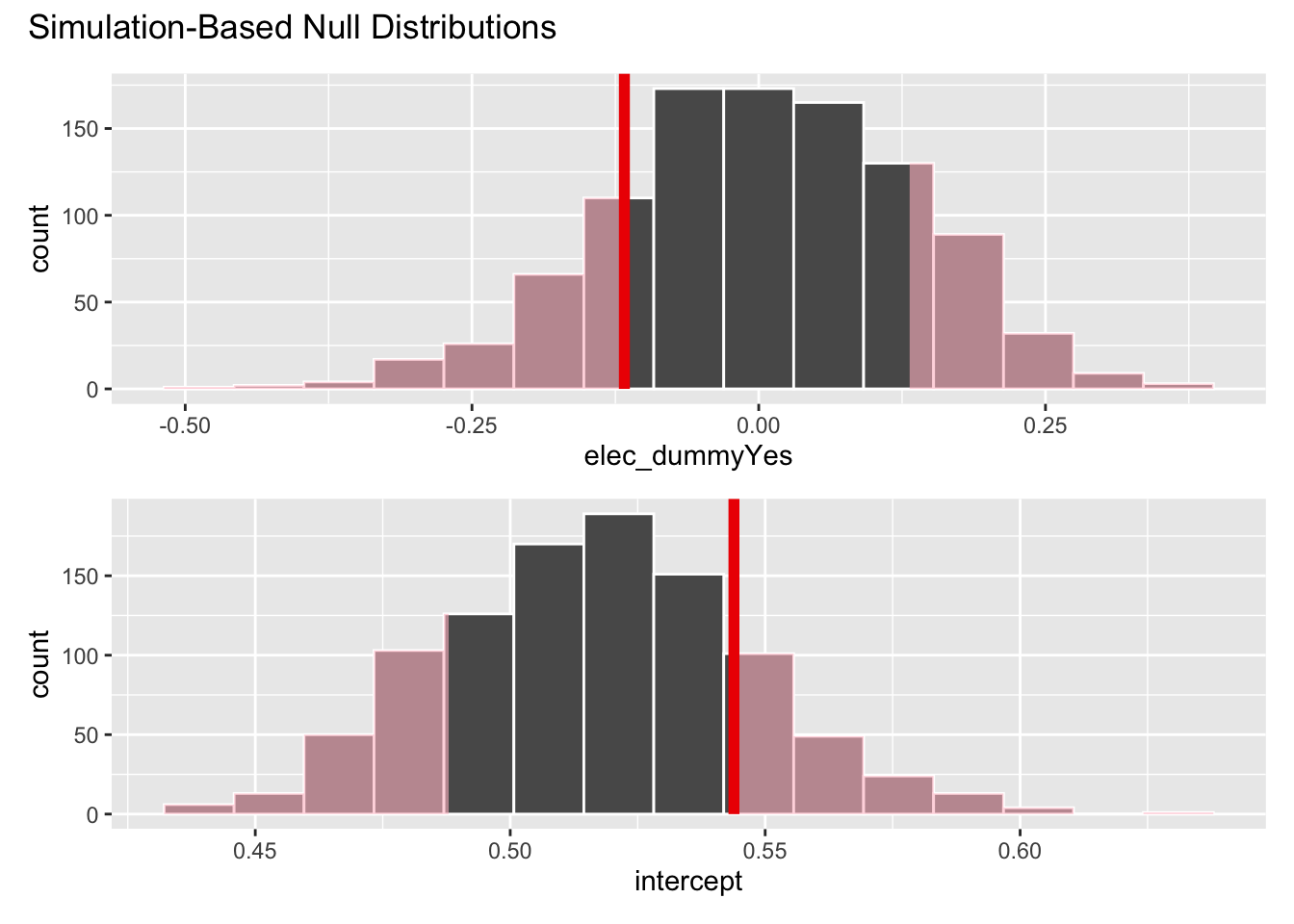

# visualize and calculate p-value

visualize(null_dist) +

  shade_p_value(obs_fit, direction = "both")

get_p_value(null_dist, obs_fit, direction = "both")

# A tibble: 2 × 2

term p_value

<chr> <dbl>

1 elec_dummyYes 0.928

2 intercept 0.928

When we checked the assumptions of the linear model, we found that this model violated several of them, casting doubt on our original results. Today we will build a series of more robust models to better evaluate the hypotheses.

Estimate a model with multiple variables

One possible problem with our model yesterday was omitted variable bias. Perhaps we omitted a crucial variable that explains the differences in per capita traffic fine revenue. Since median household income seemed to be predictive of the outcome, let’s estimate a model that includes both whether or not it is an election year and median household income.

Demo: Estimate a model that includes both elec_dummy and med_inc.

fines_inc_elec_fit <- linear_reg() |>

  fit(vehicle_code_fines_i_p ~ med_inc + elec_dummy, data = traffic_fines)

tidy(fines_inc_elec_fit)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 6.93 0.770 8.99 1.16e-18

2 med_inc -0.0000546 0.0000131 -4.17 3.37e- 5

3 elec_dummyYes 0.0400 0.539 0.0743 9.41e- 1

Your turn: Interpret the coefficients of the model above.

- Intercept: Counties not in an election year and with a median household income of $0 are expected to earn, on average, $6.93 per capita in traffic fines revenue.

- Coefficient for median household income: All else held constant, for each $1,000 increase in median household income, per capita traffic fines revenue is expected to decrease, on average, by $0.055.

- Coefficient for whether or not it is an election year: All else held constant, counties in an election year are expected to earn, on average, $0.04 more per capita in traffic fines revenue than counties not in an election year.
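The rescaling behind the income interpretation can be checked with a few lines of arithmetic. The sketch below copies the rounded estimates from the `tidy()` output above; the $60,000 income value is a hypothetical example, not a number taken from the data.

```r
# Rounded estimates copied from the tidy() output above
b_intercept <- 6.93
b_med_inc <- -0.0000546 # change in per capita fines per $1 of median income

# Rescale the slope to a $1,000 increase in median household income
b_per_1000 <- b_med_inc * 1000
round(b_per_1000, 3)

# Hypothetical example: predicted per capita fines for a county that is
# not in an election year and has a median household income of $60,000
pred_60k <- b_intercept + b_med_inc * 60000
pred_60k
```

Reporting the slope per $1,000 rather than per $1 does not change the model; it only makes the coefficient easier to read.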

Hypothesis test

Demo: Use permutation-based methods to conduct the hypothesis test.

- Median household income

  - Null hypothesis: There is no linear relationship between median household income and per capita traffic fines revenue.

    \[H_0: \beta_1 = 0\]

  - Alternative hypothesis: There is some linear relationship between median household income and per capita traffic fines revenue.

    \[H_A: \beta_1 \neq 0\]

- Whether or not it is an election year

  - Null hypothesis: There is no linear relationship between whether or not it is an election year and per capita traffic fines revenue.

    \[H_0: \beta_2 = 0\]

  - Alternative hypothesis: There is some linear relationship between whether or not it is an election year and per capita traffic fines revenue.

    \[H_A: \beta_2 \neq 0\]

# calculate observed fit

obs_fit <- traffic_fines |>

  specify(vehicle_code_fines_i_p ~ med_inc + elec_dummy) |>

  fit()

# generate permuted null distribution

null_dist <- traffic_fines |>

  specify(vehicle_code_fines_i_p ~ med_inc + elec_dummy) |>

  hypothesize(null = "independence") |>

  generate(reps = 1000, type = "permute") |>

  fit()

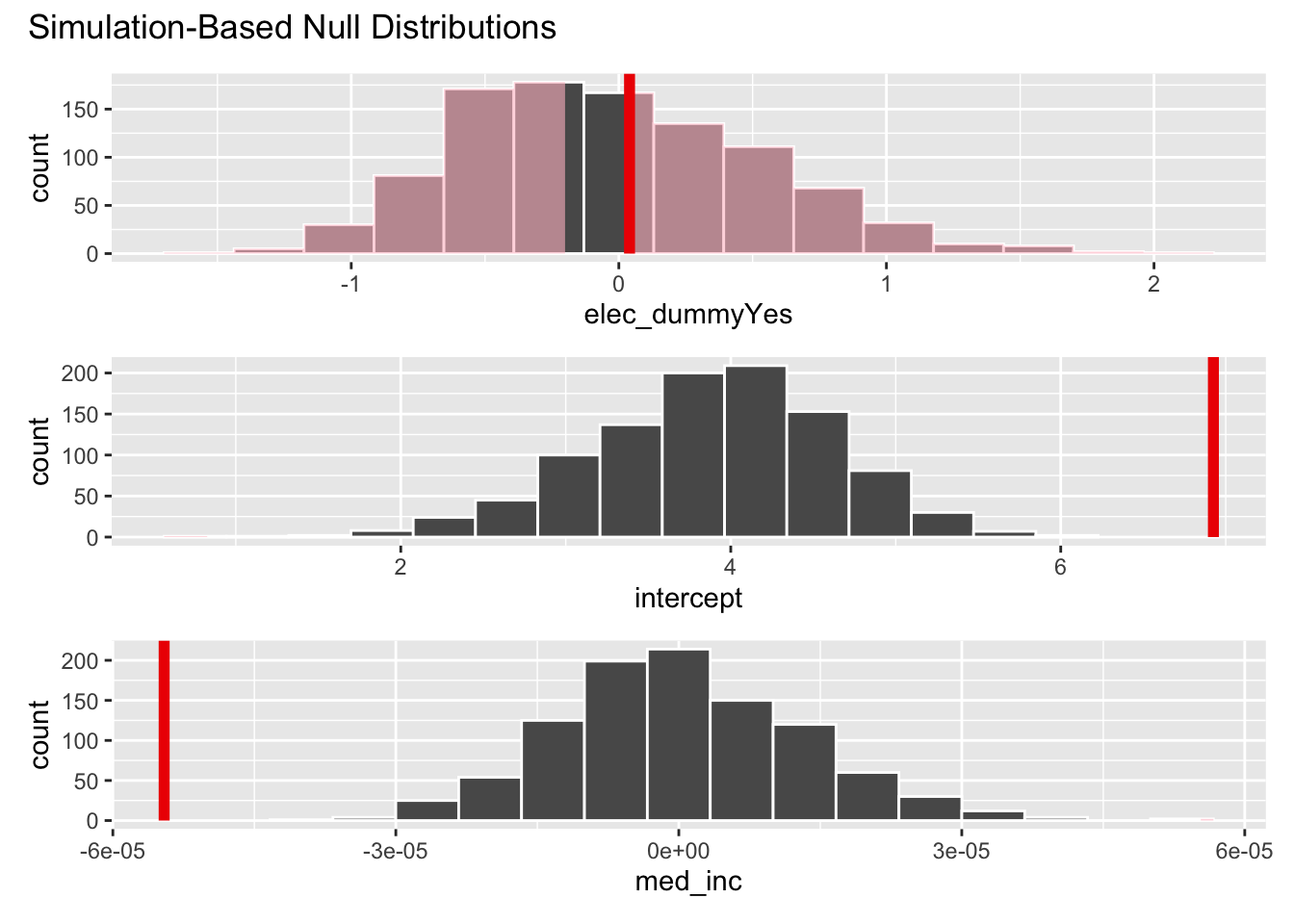

# visualize and calculate p-value

visualize(null_dist) +

  shade_p_value(obs_fit, direction = "both")

get_p_value(null_dist, obs_fit, direction = "both")

Warning: Please be cautious in reporting a p-value of 0. This result is an approximation based on the number of `reps` chosen in the `generate()` step.

ℹ See `get_p_value()` (`?infer::get_p_value()`) for more information.

# A tibble: 3 × 2

term p_value

<chr> <dbl>

1 elec_dummyYes 0.842

2 intercept 0

3 med_inc 0

Your turn: Interpret the results of the hypothesis test. Use a significance level of 5%.

- With respect to median household income, the probability of observing a relationship at least as strong as the one in the data, if the null hypothesis is true, is less than \(0.001\). Since this is less than 0.05, we reject the null hypothesis and conclude that there is a relationship between median household income and per capita traffic fines revenue.

- With respect to whether or not it is an election year, the probability of observing a relationship at least as strong as the one in the data, if the null hypothesis is true, is \(0.842\). Since this is greater than 0.05, we fail to reject the null hypothesis and cannot conclude that there is a relationship between whether or not it is an election year and per capita traffic fines revenue.

Transform a variable

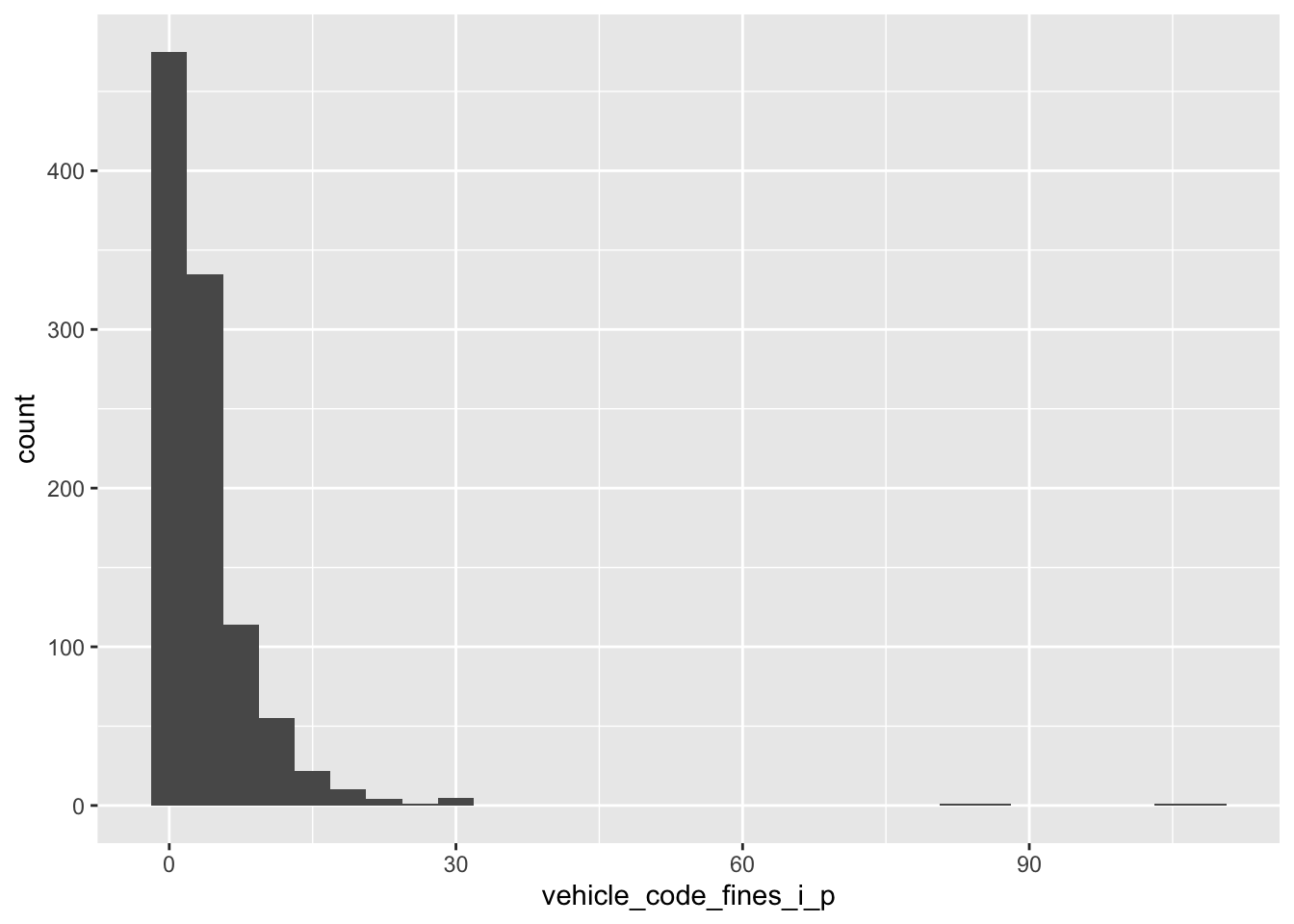

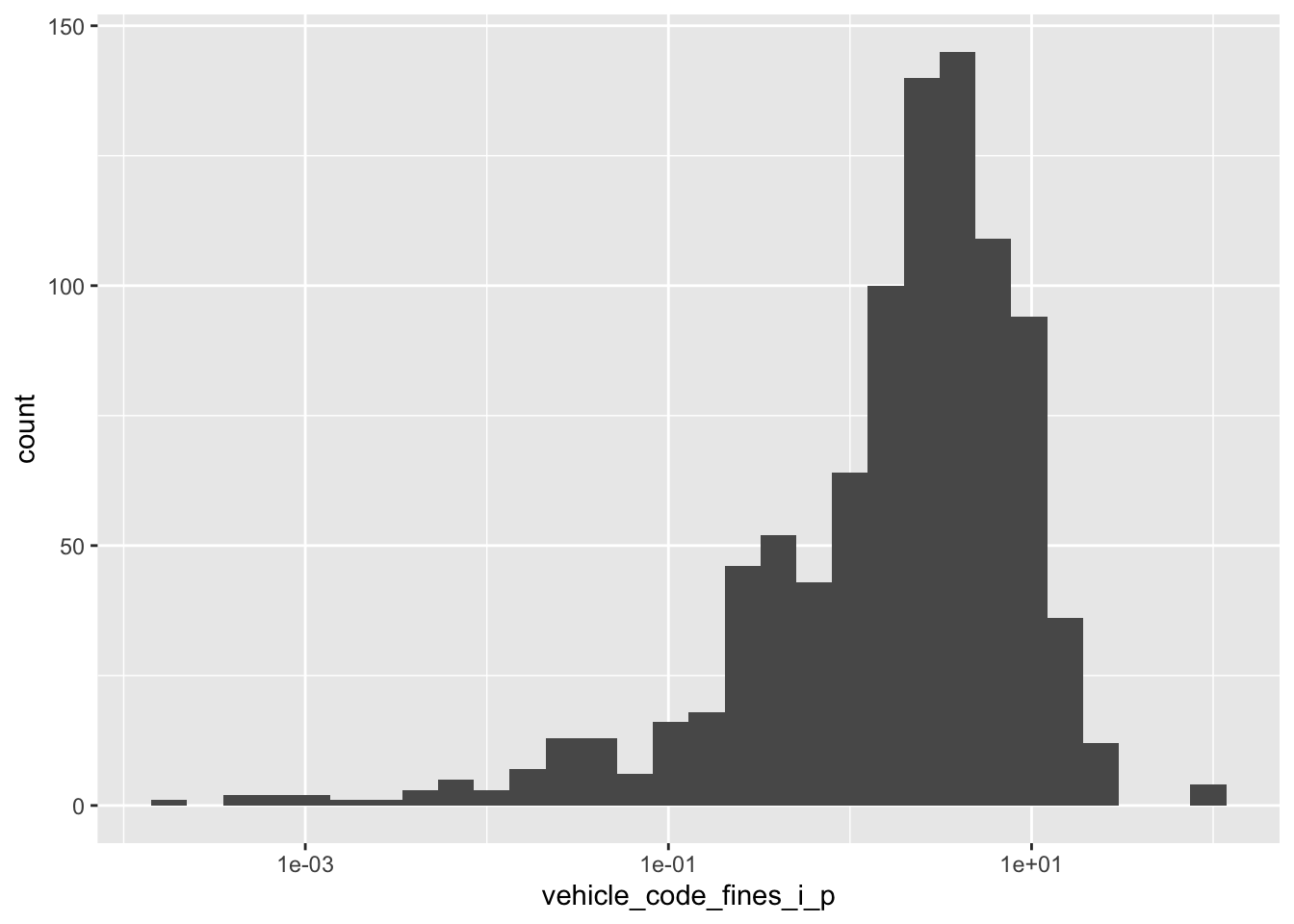

Let’s examine the per capita traffic fines revenue.

- Your turn: Run the code chunk below to create two separate plots. How do the two plots differ from each other? Which plot better depicts an unskewed variable?

# Plot A

ggplot(

  data = traffic_fines,

  mapping = aes(x = vehicle_code_fines_i_p)

) +

  geom_histogram()

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

# Plot B

ggplot(

  data = traffic_fines,

  mapping = aes(x = vehicle_code_fines_i_p)

) +

  geom_histogram() +

  scale_x_log10()

Warning in scale_x_log10(): log-10 transformation introduced infinite values.

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

Warning: Removed 87 rows containing non-finite outside the scale range (`stat_bin()`).

Fit the model

Demo: Fit a linear regression model with the transformed outcome variable.

# calculate natural-log transformed outcome

traffic_fines <- traffic_fines |>

  mutate(

    log_vehicle_code_fines_i_p = log(vehicle_code_fines_i_p),

    # replace -Inf with NA - these were counties with $0 in traffic fine revenue

    log_vehicle_code_fines_i_p = if_else(log_vehicle_code_fines_i_p == -Inf, NA, log_vehicle_code_fines_i_p)

  )

fines_elec_log_fit <- linear_reg() |>

  fit(log_vehicle_code_fines_i_p ~ elec_dummy, data = traffic_fines)

tidy(fines_elec_log_fit)

# A tibble: 2 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 0.544 0.0657 8.28 4.34e-16

2 elec_dummyYes -0.117 0.139 -0.845 3.98e- 1

Your turn: Interpret the coefficients of the model above.

Intercept: Counties not in an election year are expected to earn, on average, \(e^{0.544} \approx \$1.72\) per capita in traffic fines revenue.

Slope: Counties in an election year are expected to earn, on average, about 11% less per capita in traffic fines revenue than those not in an election year, since \(100 \times [e^{-0.117} - 1] \approx -11\%\).
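The back-transformations used in these interpretations can be reproduced directly. This is a minimal sketch using the rounded estimates from the `tidy()` output above; the helper name `pct_change_from_log_coef()` is hypothetical and introduced only for illustration.

```r
# Hypothetical helper: convert a coefficient from a model with a
# natural-log outcome into a percent change in the original outcome
pct_change_from_log_coef <- function(b) {
  100 * (exp(b) - 1)
}

# Slope for elec_dummyYes from the log-outcome model above (rounded)
pct_elec <- pct_change_from_log_coef(-0.117)
pct_elec

# Back-transform the intercept to the dollar scale
intercept_dollars <- exp(0.544)
intercept_dollars
```

A negative result means a percent decrease. Because the inputs are rounded, the result can differ in the second decimal place from a calculation based on the unrounded estimate.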

Conduct a hypothesis test

Demo: Use permutation-based methods to conduct the hypothesis test.

# calculate observed fit

obs_fit <- traffic_fines |>

  specify(log_vehicle_code_fines_i_p ~ elec_dummy) |>

  fit()

Warning: Removed 87 rows containing missing values.

# generate permuted null distribution

null_dist <- traffic_fines |>

  specify(log_vehicle_code_fines_i_p ~ elec_dummy) |>

  hypothesize(null = "independence") |>

  generate(reps = 1000, type = "permute") |>

  fit()

Warning: Removed 87 rows containing missing values.

# visualize and calculate p-value

visualize(null_dist) +

  shade_p_value(obs_fit, direction = "both")

get_p_value(null_dist, obs_fit, direction = "both")

# A tibble: 2 × 2

term p_value

<chr> <dbl>

1 elec_dummyYes 0.35

2 intercept 0.35

Your turn: Interpret the \(p\)-value in context of the data and the research question. Use a significance level of 5%.

If in fact the true relationship between whether or not it is an election year and per capita traffic fines revenue is zero, the probability of observing a relationship at least as strong as the one in the data is \(0.35\). Since this is greater than 0.05, we fail to reject the null hypothesis and cannot conclude that there is a relationship between whether or not it is an election year and per capita traffic fines revenue.
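To make the permutation logic concrete, here is a simplified base R sketch of what a permutation test for a single slope does. It uses simulated data, not the traffic fines data, and the two-sided p-value is computed as the share of permuted slopes at least as far from zero as the observed slope, which is an assumption of this sketch and not necessarily the exact calculation {infer} performs.

```r
set.seed(123)

# Simulated (not real) data: a numeric outcome and a two-level group
n <- 200
group <- rep(c("No", "Yes"), times = c(150, 50))
y <- rnorm(n, mean = 1, sd = 2)

# Observed slope from regressing the outcome on the group indicator
obs_slope <- coef(lm(y ~ group))[["groupYes"]]

# Null distribution: shuffle the outcome, refit, and keep the slope
null_slopes <- replicate(1000, {
  y_perm <- sample(y)
  coef(lm(y_perm ~ group))[["groupYes"]]
})

# Two-sided p-value: share of permuted slopes at least as extreme as observed
p_value <- mean(abs(null_slopes) >= abs(obs_slope))
p_value
```

Shuffling the outcome breaks any link between the outcome and the predictor, so the permuted slopes show how large a slope we would expect to see by chance alone.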

What about other differences?

So far we have only estimated a model with at most two explanatory variables. Yet there are many other variables in the data set that could explain the variation in per capita traffic fines revenue.

Recall that the data set is a panel structure - we observe multiple counties over multiple years. This violates the linear model assumption of independence of the observations. Consider the most obvious example - traffic fines revenue within a single county is likely to be correlated across years (e.g. knowing how much revenue was generated in Los Angeles County this year allows me to make a better guess for next year’s revenue).

Fixed effects

One way we can account for this is by estimating a fixed effects model. This model includes a separate coefficient for each county, which allows us to account for the correlation among observations from the same county.
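As a rough illustration of what the county coefficients do, the sketch below uses a small simulated panel (hypothetical counties, not the traffic fines data) to show that including one indicator per county gives the same slope as first subtracting each county's own average from the predictor and the outcome.

```r
set.seed(123)

# Simulated (not real) panel: 5 hypothetical counties observed 8 times each
county <- rep(paste0("county_", 1:5), each = 8)
county_effect <- rep(c(-2, -1, 0, 1, 2), each = 8)
x <- rnorm(40)
y <- 0.5 * x + county_effect + rnorm(40, sd = 0.3)

# Approach 1: one indicator (dummy) variable per county
fe_dummy <- lm(y ~ x + factor(county))

# Approach 2: subtract each county's mean from x and y, then regress
x_within <- x - ave(x, county)
y_within <- y - ave(y, county)
fe_within <- lm(y_within ~ x_within)

# Both approaches give the same slope for x
slope_dummy <- coef(fe_dummy)[["x"]]
slope_within <- coef(fe_within)[["x"]]
c(dummy = slope_dummy, within = slope_within)
```

In other words, the fixed effects model compares each county to itself over time, so stable differences between counties no longer drive the estimated slope.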

Demo: Let’s estimate a model that includes elec_dummy and a fixed effect for each county. We’ll also use the log-transformed outcome of interest.

fines_fe_fit <- linear_reg() |>

  fit(log_vehicle_code_fines_i_p ~ elec_dummy + factor(county_name), data = traffic_fines)

tidy(fines_fe_fit)

# A tibble: 57 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 0.963 0.214 4.50 7.65e- 6

2 elec_dummyYes -0.0981 0.0708 -1.38 1.67e- 1

3 factor(county_name)Alpine 1.07 0.385 2.77 5.69e- 3

4 factor(county_name)Amador -1.11 0.323 -3.44 6.03e- 4

5 factor(county_name)Butte -0.832 0.458 -1.82 6.95e- 2

6 factor(county_name)Calaveras 0.402 0.302 1.33 1.84e- 1

7 factor(county_name)Colusa 0.0297 0.302 0.0984 9.22e- 1

8 factor(county_name)Contra Costa -0.471 0.302 -1.56 1.19e- 1

9 factor(county_name)Del Norte -5.36 0.370 -14.5 7.97e-43

10 factor(county_name)El Dorado -0.177 0.302 -0.585 5.58e- 1

# ℹ 47 more rows

Your turn: Interpret the coefficient for elec_dummy.

Holding county constant, counties in an election year are expected to earn, on average, about 9.34% less per capita in traffic fines revenue than those not in an election year, since \(100 \times [e^{-0.0981} - 1] \approx -9.34\%\).

Conduct a hypothesis test

Demo: Use permutation-based methods to conduct the hypothesis test.

# calculate observed fit

obs_fit <- traffic_fines |>

  specify(log_vehicle_code_fines_i_p ~ elec_dummy + factor(county_name)) |>

  fit()

Warning: Removed 87 rows containing missing values.

# generate permuted null distribution

null_dist <- traffic_fines |>

  specify(log_vehicle_code_fines_i_p ~ elec_dummy + factor(county_name)) |>

  hypothesize(null = "independence") |>

  generate(reps = 1000, type = "permute") |>

  fit()

Warning: Removed 87 rows containing missing values.

# calculate p-value

get_p_value(null_dist, obs_fit, direction = "both")

Warning: Please be cautious in reporting a p-value of 0. This result is an approximation based on the number of `reps` chosen in the `generate()` step.

ℹ See `get_p_value()` (`?infer::get_p_value()`) for more information.

# A tibble: 57 × 2

term p_value

<chr> <dbl>

1 elec_dummyYes 0.476

2 factor(county_name)Alpine 0.158

3 factor(county_name)Amador 0.072

4 factor(county_name)Butte 0.298

5 factor(county_name)Calaveras 0.496

6 factor(county_name)Colusa 0.944

7 factor(county_name)Contra Costa 0.402

8 factor(county_name)Del Norte 0

9 factor(county_name)El Dorado 0.756

10 factor(county_name)Fresno 0.762

# ℹ 47 more rows

Your turn: Interpret the results of the hypothesis test in context of the data and the research question. Use a significance level of 5%.

If in fact the true relationship between whether or not it is an election year and per capita traffic fines revenue is zero, the probability of observing a relationship at least as strong as the one in the data is \(0.476\). Since this is greater than 0.05, we fail to reject the null hypothesis and cannot conclude that there is a relationship between whether or not it is an election year and per capita traffic fines revenue.

Include everything

Finally, let’s estimate a model that includes all the variables in the data set.

# keep all variables used in original analysis - table 1 column 2

tf_replication <- traffic_fines |>

  select(

    log_vehicle_code_fines_i_p, elec_dummy, dem_share, otherparty_share,

    asian_share, black_share, hispanic_share, other_share,

    young_drivers, density, med_inc, unemp, own_source_share,

    emp_goods, emp_service, pay_goods_i, pay_service_i, arte_share,

    collect_share, cnty_le_sworn_1000p, felony_tot_1000p, misdemeanor_tot_1000p,

    county_name,

    # separate columns for each county to include the year trend

    starts_with("i_trend")

  )

fines_all_fit <- linear_reg() |>

  # use all remaining columns as predictors

  fit(log_vehicle_code_fines_i_p ~ .,

    data = tf_replication

  )

tidy(fines_all_fit)

# A tibble: 134 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 3.13 10.7 0.294 0.769

2 elec_dummyYes -0.0895 0.0505 -1.77 0.0767

3 dem_share -0.0275 0.0128 -2.14 0.0327

4 otherparty_share -0.0285 0.0165 -1.73 0.0836

5 asian_share -0.0298 0.182 -0.164 0.870

6 black_share -1.05 0.346 -3.03 0.00252

7 hispanic_share 0.450 0.0795 5.66 0.0000000215

8 other_share 1.30 0.414 3.14 0.00175

9 young_drivers 0.124 0.0620 2.00 0.0459

10 density 0.000306 0.00448 0.0683 0.946

# ℹ 124 more rows

Your turn: Interpret the coefficient for elec_dummy.

Holding all other variables constant, counties in an election year are expected to earn, on average, about 8.56% less per capita in traffic fines revenue than those not in an election year, since \(100 \times [e^{-0.0895} - 1] \approx -8.56\%\).

Conduct a hypothesis test

At this point it seems reasonable that we have met the assumptions of the linear model. Let’s conduct a hypothesis test to evaluate the relationship between whether or not it is an election year and per capita traffic fines revenue. Instead of relying on the permutation-based approach, we will use the standard \(t\)-test that was reported when we estimated the model above.

Your turn: Interpret the results of the hypothesis test in context of the data and the research question. Use a significance level of 5%.

If in fact the true relationship between whether or not it is an election year and per capita traffic fines revenue is zero, the probability of observing a relationship at least as strong as the one in the data is \(0.0767\). Since this is greater than 0.05, we fail to reject the null hypothesis and cannot conclude that there is a relationship between whether or not it is an election year and per capita traffic fines revenue.

Comparing across models

Let’s compare the models we estimated today. Remember that we are interested in the simplest model that still performs well.

glance(fines_elec_dummy_fit)

# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.000000968 -0.000977 7.23 0.000990 0.975 1 -3481. 6969. 6983.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

glance(fines_inc_elec_fit)

# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.0167 0.0148 7.17 8.67 0.000184 2 -3473. 6953. 6973.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

glance(fines_elec_log_fit)

# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.000763 -0.000305 1.77 0.715 0.398 1 -1867. 3739. 3754.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

glance(fines_fe_fit)

# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.754 0.739 0.906 48.3 7.62e-229 56 -1209. 2533. 2814.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

glance(fines_all_fit)

# A tibble: 1 × 12

r.squared adj.r.squared sigma statistic p.value df logLik AIC BIC

<dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

1 0.896 0.878 0.610 49.5 4.38e-297 132 -750. 1769. 2411.

# ℹ 3 more variables: deviance <dbl>, df.residual <int>, nobs <int>

Your turn: Which model do you believe is most appropriate? Why?

The model that includes all variables is the most appropriate. The fixed-effects model is potentially a good choice with a high adjusted-\(R^2\) value, but the model that also includes the control variables has an adjusted-\(R^2\) value nearly 14 points higher. In this situation, the additional complexity of the model is outweighed by the improvement in performance.
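The comparison above leans on adjusted \(R^2\), which penalizes \(R^2\) for every additional predictor. As a small sketch of how that penalty works, the code below recomputes adjusted \(R^2\) by hand for a model fit to the built-in `mtcars` data (used here only as a stand-in, not the traffic fines data) and compares it with the value R reports.

```r
# Built-in example data (mtcars), not the traffic fines data
fit <- lm(mpg ~ wt + hp, data = mtcars)

r2 <- summary(fit)$r.squared
n <- nobs(fit)              # number of observations
p <- length(coef(fit)) - 1  # number of predictors, excluding the intercept

# Adjusted R-squared shrinks R-squared as the number of predictors grows
adj_r2_manual <- 1 - (1 - r2) * (n - 1) / (n - p - 1)

adj_r2_reported <- summary(fit)$adj.r.squared
c(manual = adj_r2_manual, reported = adj_r2_reported)
```

Because of this penalty, a model with many more predictors only earns a higher adjusted \(R^2\) when the extra predictors explain enough additional variation to offset their cost.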

sessioninfo::session_info()

─ Session info ───────────────────────────────────────────────────────────────

setting value

version R version 4.4.2 (2024-10-31)

os macOS Sonoma 14.6.1

system aarch64, darwin20

ui X11

language (EN)

collate en_US.UTF-8

ctype en_US.UTF-8

tz America/New_York

date 2025-03-28

pandoc 3.4 @ /usr/local/bin/ (via rmarkdown)

─ Packages ───────────────────────────────────────────────────────────────────

package * version date (UTC) lib source

archive 1.1.9 2024-09-12 [1] CRAN (R 4.4.1)

backports 1.5.0 2024-05-23 [1] CRAN (R 4.4.0)

bit 4.0.5 2022-11-15 [1] CRAN (R 4.3.0)

bit64 4.0.5 2020-08-30 [1] CRAN (R 4.3.0)

broom * 1.0.6 2024-05-17 [1] CRAN (R 4.4.0)

class 7.3-22 2023-05-03 [1] CRAN (R 4.4.2)

cli 3.6.3 2024-06-21 [1] CRAN (R 4.4.0)

codetools 0.2-20 2024-03-31 [1] CRAN (R 4.4.2)

crayon 1.5.3 2024-06-20 [1] CRAN (R 4.4.0)

data.table 1.15.4 2024-03-30 [1] CRAN (R 4.3.1)

dials * 1.3.0 2024-07-30 [1] CRAN (R 4.4.0)

DiceDesign 1.10 2023-12-07 [1] CRAN (R 4.3.1)

dichromat 2.0-0.1 2022-05-02 [1] CRAN (R 4.3.0)

digest 0.6.37 2024-08-19 [1] CRAN (R 4.4.1)

dplyr * 1.1.4 2023-11-17 [1] CRAN (R 4.3.1)

evaluate 1.0.3 2025-01-10 [1] CRAN (R 4.4.1)

farver 2.1.2 2024-05-13 [1] CRAN (R 4.3.3)

fastmap 1.2.0 2024-05-15 [1] CRAN (R 4.4.0)

forcats * 1.0.0 2023-01-29 [1] CRAN (R 4.3.0)

foreach 1.5.2 2022-02-02 [1] CRAN (R 4.3.0)

furrr 0.3.1 2022-08-15 [1] CRAN (R 4.3.0)

future 1.33.2 2024-03-26 [1] CRAN (R 4.3.1)

future.apply 1.11.2 2024-03-28 [1] CRAN (R 4.3.1)

generics 0.1.3 2022-07-05 [1] CRAN (R 4.3.0)

ggplot2 * 3.5.1 2024-04-23 [1] CRAN (R 4.3.1)

globals 0.16.3 2024-03-08 [1] CRAN (R 4.3.1)

glue 1.8.0 2024-09-30 [1] CRAN (R 4.4.1)

gower 1.0.1 2022-12-22 [1] CRAN (R 4.3.0)

GPfit 1.0-8 2019-02-08 [1] CRAN (R 4.3.0)

gtable 0.3.6 2024-10-25 [1] CRAN (R 4.4.1)

hardhat 1.4.0 2024-06-02 [1] CRAN (R 4.4.0)

here 1.0.1 2020-12-13 [1] CRAN (R 4.3.0)

hms 1.1.3 2023-03-21 [1] CRAN (R 4.3.0)

htmltools 0.5.8.1 2024-04-04 [1] CRAN (R 4.3.1)

htmlwidgets 1.6.4 2023-12-06 [1] CRAN (R 4.3.1)

infer * 1.0.7 2024-03-25 [1] CRAN (R 4.3.1)

ipred 0.9-14 2023-03-09 [1] CRAN (R 4.3.0)

iterators 1.0.14 2022-02-05 [1] CRAN (R 4.3.0)

jsonlite 1.8.9 2024-09-20 [1] CRAN (R 4.4.1)

knitr 1.49 2024-11-08 [1] CRAN (R 4.4.1)

labeling 0.4.3 2023-08-29 [1] CRAN (R 4.3.0)

lattice 0.22-6 2024-03-20 [1] CRAN (R 4.4.2)

lava 1.8.0 2024-03-05 [1] CRAN (R 4.3.1)

lhs 1.1.6 2022-12-17 [1] CRAN (R 4.3.0)

lifecycle 1.0.4 2023-11-07 [1] CRAN (R 4.3.1)

listenv 0.9.1 2024-01-29 [1] CRAN (R 4.3.1)

lubridate * 1.9.3 2023-09-27 [1] CRAN (R 4.3.1)

magrittr 2.0.3 2022-03-30 [1] CRAN (R 4.3.0)

MASS 7.3-61 2024-06-13 [1] CRAN (R 4.4.2)

Matrix 1.7-1 2024-10-18 [1] CRAN (R 4.4.2)

modeldata * 1.4.0 2024-06-19 [1] CRAN (R 4.4.0)

nnet 7.3-19 2023-05-03 [1] CRAN (R 4.4.2)

parallelly 1.37.1 2024-02-29 [1] CRAN (R 4.3.1)

parsnip * 1.2.1 2024-03-22 [1] CRAN (R 4.3.1)

patchwork 1.2.0 2024-01-08 [1] CRAN (R 4.3.1)

pillar 1.10.1 2025-01-07 [1] CRAN (R 4.4.1)

pkgconfig 2.0.3 2019-09-22 [1] CRAN (R 4.3.0)

prodlim 2023.08.28 2023-08-28 [1] CRAN (R 4.3.0)

purrr * 1.0.2 2023-08-10 [1] CRAN (R 4.3.0)

R6 2.5.1 2021-08-19 [1] CRAN (R 4.3.0)

RColorBrewer 1.1-3 2022-04-03 [1] CRAN (R 4.3.0)

Rcpp 1.0.14 2025-01-12 [1] CRAN (R 4.4.1)

readr * 2.1.5 2024-01-10 [1] CRAN (R 4.3.1)

recipes * 1.0.10 2024-02-18 [1] CRAN (R 4.3.1)

rlang 1.1.5 2025-01-17 [1] CRAN (R 4.4.1)

rmarkdown 2.29 2024-11-04 [1] CRAN (R 4.4.1)

rpart 4.1.23 2023-12-05 [1] CRAN (R 4.4.2)

rprojroot 2.0.4 2023-11-05 [1] CRAN (R 4.3.1)

rsample * 1.2.1 2024-03-25 [1] CRAN (R 4.3.1)

rstudioapi 0.17.0 2024-10-16 [1] CRAN (R 4.4.1)

scales * 1.3.0.9000 2025-03-19 [1] Github (bensoltoff/scales@71d8f13)

sessioninfo 1.2.2 2021-12-06 [1] CRAN (R 4.3.0)

stringi 1.8.4 2024-05-06 [1] CRAN (R 4.3.1)

stringr * 1.5.1 2023-11-14 [1] CRAN (R 4.3.1)

survival 3.7-0 2024-06-05 [1] CRAN (R 4.4.2)

tibble * 3.2.1 2023-03-20 [1] CRAN (R 4.3.0)

tidymodels * 1.2.0 2024-03-25 [1] CRAN (R 4.3.1)

tidyr * 1.3.1 2024-01-24 [1] CRAN (R 4.3.1)

tidyselect 1.2.1 2024-03-11 [1] CRAN (R 4.3.1)

tidyverse * 2.0.0 2023-02-22 [1] CRAN (R 4.3.0)

timechange 0.3.0 2024-01-18 [1] CRAN (R 4.3.1)

timeDate 4032.109 2023-12-14 [1] CRAN (R 4.3.1)

tune * 1.2.1 2024-04-18 [1] CRAN (R 4.3.1)

tzdb 0.4.0 2023-05-12 [1] CRAN (R 4.3.0)

utf8 1.2.4 2023-10-22 [1] CRAN (R 4.3.1)

vctrs 0.6.5 2023-12-01 [1] CRAN (R 4.3.1)

vroom 1.6.5 2023-12-05 [1] CRAN (R 4.3.1)

withr 3.0.2 2024-10-28 [1] CRAN (R 4.4.1)

workflows * 1.1.4 2024-02-19 [1] CRAN (R 4.4.0)

workflowsets * 1.1.0 2024-03-21 [1] CRAN (R 4.3.1)

xfun 0.50.5 2025-01-15 [1] https://yihui.r-universe.dev (R 4.4.2)

yaml 2.3.10 2024-07-26 [1] CRAN (R 4.4.0)

yardstick * 1.3.1 2024-03-21 [1] CRAN (R 4.3.1)

[1] /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/library

──────────────────────────────────────────────────────────────────────────────