HW 09 - Predicting attitudes towards marijuana

This homework is due April 30 at 11:59pm ET.

Learning objectives

- Implement resampling methods for machine learning

- Estimate machine learning models for classification

- Evaluate model performance using appropriate metrics

- Utilize feature engineering and hyperparameter tuning to improve model performance

Getting started

Go to the info2951-sp25 organization on GitHub. Click on the repo with the prefix hw-09. It contains the starter documents you need to complete the homework.

Clone the repo and start a new project in RStudio. See the Lab 0 instructions for details on cloning a repo and starting a new R project.

General guidance

As we’ve discussed in lecture, your plots should include an informative title, axes should be labeled, and careful consideration should be given to aesthetic choices.

Remember that developing a sound workflow for reproducible data analysis remains important as you complete this homework and other assignments in this course. This assignment includes periodic reminders to render, commit, and push your changes to GitHub. You should have at least 3 commits with meaningful commit messages by the end of the assignment.

Make sure to

- Update author name on your document.

- Label all code chunks informatively and concisely.

- Follow the Tidyverse code style guidelines.

- Make at least 3 commits.

- Resize figures where needed; avoid tiny or huge plots.

- Turn in an organized, well formatted document.

The models in this assignment take a long time to fit. If you wait until the last minute to render your Quarto document, you will likely run out of time to submit it, especially if you wait until the slip day deadline. I highly recommend using code caching for your model code chunks so they do not rerun unnecessarily on every render. Refer back to the slides for setting up chunk dependencies.
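As a rough sketch of what chunk-level caching can look like with the knitr engine: each group of lines below stands for the contents of one separate code chunk, with the #| option lines placed at the top of that chunk. The chunk labels (slow-fit, summarize-fit) and the stand-in computation are hypothetical; the slides remain the reference for the setup expected in this course.

```r
# --- contents of a first chunk: cache the slow step ---
#| label: slow-fit
#| cache: true
set.seed(123)
# stand-in for an expensive model fit
slow_result <- replicate(1000, mean(rnorm(1000)))

# --- contents of a second chunk: depends on the first ---
#| label: summarize-fit
#| cache: true
#| dependson: "slow-fit"
# invalidated whenever the slow-fit chunk changes
slow_summary <- mean(slow_result)
```

Declaring the dependency means the cached summary is recomputed when the upstream chunk changes, rather than silently reusing stale results.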

Data and packages

We’ll use the {tidyverse} and {tidymodels} packages for this assignment.

The General Social Survey is a biennial survey of the American public.

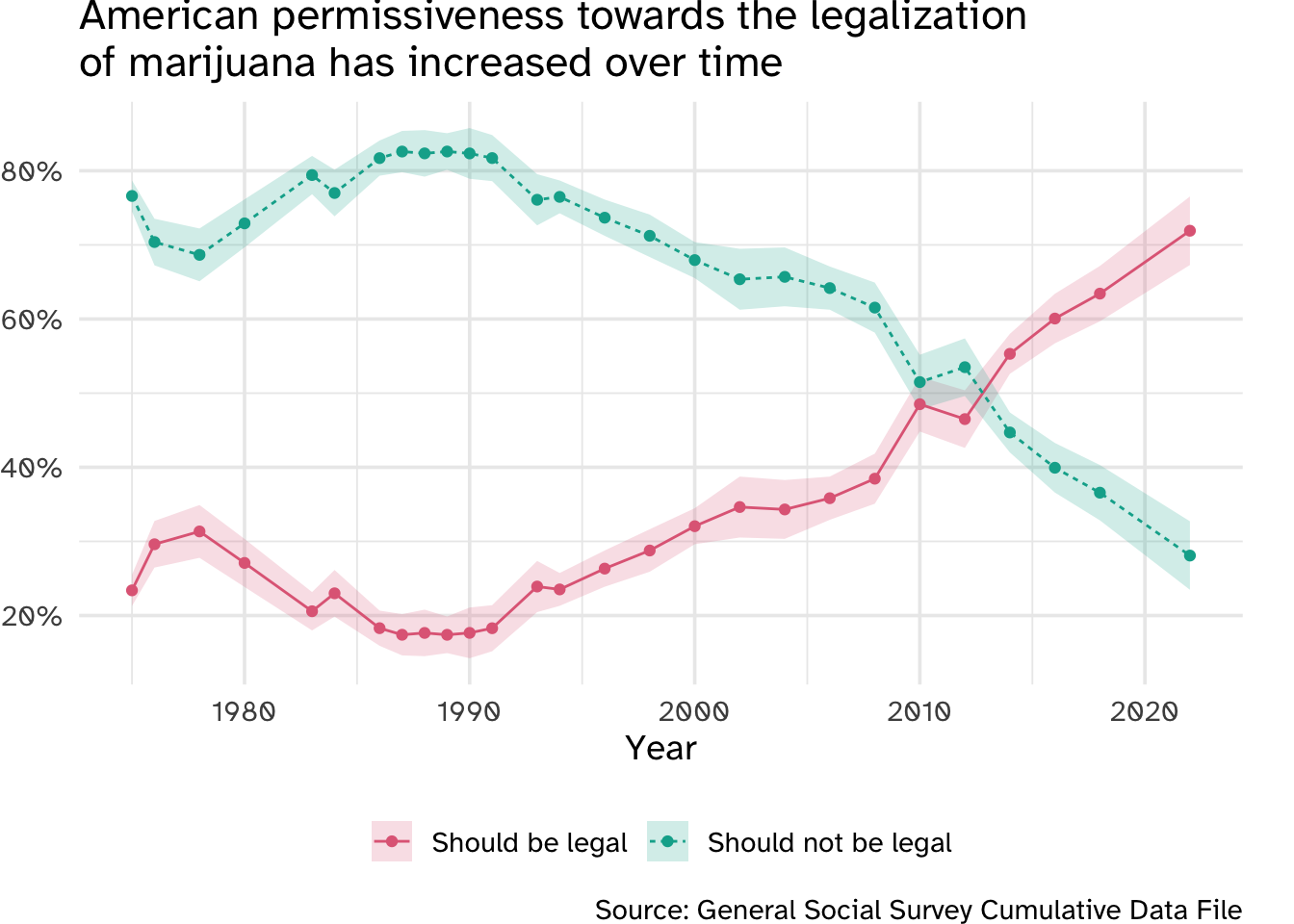

Over the past twenty years, American attitudes towards marijuana have softened extensively. In the early 2010s, the number of Americans who believed marijuana should be legal began to outnumber those who thought it should not be legal.

data/gss.rds contains a selection of variables from the 2022 GSS. The outcome of interest grass is a factor variable coded as either "should be legal" (respondent believes marijuana should be legal) or "should not be legal" (respondent believes marijuana should not be legal).

| Name | gss |
|---|---|
| Number of rows | 3319 |
| Number of columns | 25 |
| Column type frequency: | |
| factor | 22 |
| numeric | 3 |
| Group variables | None |

Variable type: factor

| skim_variable | n_missing | complete_rate | ordered | n_unique | top_counts |

|---|---|---|---|---|---|

| colath | 1180 | 0.64 | FALSE | 2 | yes: 1428, not: 711 |

| colmslm | 1184 | 0.64 | FALSE | 2 | not: 1446, yes: 689 |

| degree | 1 | 1.00 | TRUE | 5 | hig: 1554, bac: 689, gra: 475, les: 323 |

| fear | 1151 | 0.65 | FALSE | 2 | no: 1327, yes: 841 |

| grass | 2425 | 0.27 | FALSE | 2 | sho: 636, sho: 258 |

| gunlaw | 1169 | 0.65 | FALSE | 2 | fav: 1571, opp: 579 |

| happy | 21 | 0.99 | TRUE | 3 | pre: 1830, not: 767, ver: 701 |

| health | 6 | 1.00 | FALSE | 4 | goo: 1742, fai: 801, exc: 618, poo: 152 |

| hispanic | 32 | 0.99 | FALSE | 22 | not: 2674, mex: 329, pue: 75, cub: 44 |

| income16 | 394 | 0.88 | TRUE | 26 | $17: 320, $60: 294, $90: 239, $75: 237 |

| letdie1 | 2226 | 0.33 | FALSE | 2 | yes: 811, no: 282 |

| owngun | 1159 | 0.65 | FALSE | 3 | no: 1425, yes: 700, ref: 35 |

| partyid | 29 | 0.99 | TRUE | 8 | ind: 780, str: 569, not: 471, ind: 384 |

| polviews | 108 | 0.97 | TRUE | 7 | mod: 1211, con: 449, lib: 445, sli: 405 |

| pray | 37 | 0.99 | FALSE | 6 | sev: 955, nev: 675, onc: 634, les: 434 |

| pres20 | 1073 | 0.68 | FALSE | 4 | bid: 1386, tru: 770, oth: 74, did: 16 |

| race | 50 | 0.98 | FALSE | 3 | whi: 2114, bla: 626, oth: 529 |

| region | 0 | 1.00 | FALSE | 9 | sou: 736, eas: 559, pac: 553, wes: 356 |

| sex | 18 | 0.99 | FALSE | 2 | fem: 1772, mal: 1529 |

| sexfreq | 1898 | 0.43 | TRUE | 7 | not: 430, 2 o: 210, 2 o: 206, abo: 195 |

| wrkstat | 6 | 1.00 | FALSE | 8 | wor: 1514, ret: 678, wor: 340, kee: 243 |

| zodiac | 266 | 0.92 | FALSE | 12 | lib: 281, pis: 280, gem: 276, leo: 272 |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| id | 0 | 1.00 | 2078.87 | 1200.51 | 1 | 1036 | 2080 | 3122 | 4150 | ▇▇▇▇▇ |

| age | 207 | 0.94 | 48.28 | 17.72 | 18 | 33 | 47 | 63 | 89 | ▇▇▇▆▂ |

| hrs1 | 1470 | 0.56 | 39.76 | 14.13 | 0 | 36 | 40 | 45 | 89 | ▁▂▇▁▁ |

You can find the documentation for each of the available variables using the GSS Data Explorer. Just search by the column name to find the associated description.

Exercises

Exercise 1

Selecting potential features. For each of the variables below, explain whether or not you think they would be useful predictors for grass and why.

- degree

- happy

- zodiac

- id

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 2

Partitioning your data. Reproducibly split your data into training and test sets. Allocate 75% of observations to training, and 25% to testing. Partition the training set into 10 distinct folds for model fitting. Unless otherwise stated, you will use these sets for all the remaining exercises.
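A minimal sketch of a reproducible 75/25 split followed by 10-fold cross-validation, using a small simulated data frame rather than the real gss data. The object names (toy, toy_split, toy_folds) and the seed value are arbitrary choices for illustration.

```r
library(tidymodels)

# Toy stand-in for the real data; column names mirror gss.rds
set.seed(2951)
toy <- tibble(
  id = 1:200,
  grass = factor(sample(
    c("should be legal", "should not be legal"),
    size = 200, replace = TRUE
  )),
  age = sample(18:89, 200, replace = TRUE)
)

# Setting the seed before splitting makes the partition reproducible
set.seed(2951)
toy_split <- initial_split(toy, prop = 0.75)
toy_train <- training(toy_split)
toy_test <- testing(toy_split)

# Build the folds from the training set only
toy_folds <- vfold_cv(toy_train, v = 10)
```

Building the folds from the training set alone keeps the test set untouched until the final evaluation.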

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 3

Fit a null model. To establish a baseline for evaluating model performance, we want to estimate a null model. This is a model with zero predictors. In the absence of predictors, our best guess for a classification model is to predict the modal outcome for all observations (e.g. if a majority of respondents in the training set believe marijuana should be legal, then we would predict that outcome for every respondent).

The {parsnip} package includes a model specification for the null model. Fit the null model using the cross-validated folds. Report the accuracy, ROC AUC values, and confusion matrix for this model. How does the null model perform?
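One possible shape for this workflow is sketched below on simulated data, assuming the null_model() specification with the "parsnip" engine. The toy data frame, the 5 folds, and the object names are illustrative only. Predictions are saved so that a resampled confusion matrix can be computed afterwards.

```r
library(tidymodels)

# Simulated data with an imbalanced two-level outcome
set.seed(2951)
toy <- tibble(
  grass = factor(sample(
    c("should be legal", "should not be legal"),
    size = 200, replace = TRUE, prob = c(0.7, 0.3)
  )),
  age = sample(18:89, 200, replace = TRUE)
)
toy_folds <- vfold_cv(toy, v = 5)

# Null model: ignores predictors and predicts the modal class
null_spec <- null_model() |>
  set_engine("parsnip") |>
  set_mode("classification")

null_wf <- workflow() |>
  add_formula(grass ~ .) |>
  add_model(null_spec)

# save_pred = TRUE keeps the predictions for the confusion matrix
null_res <- fit_resamples(
  null_wf,
  resamples = toy_folds,
  metrics = metric_set(accuracy, roc_auc),
  control = control_resamples(save_pred = TRUE)
)

null_metrics <- collect_metrics(null_res)
null_cm <- conf_mat_resampled(null_res, tidy = FALSE)
```

Because the null model assigns every observation the same predicted probabilities, it cannot rank observations, so its ROC AUC sits at 0.5 and its accuracy roughly equals the share of the modal class.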

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 4

Fit a basic logistic regression model. Estimate a simple logistic regression model to predict grass as a function of age, degree, happy, partyid, and sex. Fit the model using the cross-validated folds without any explicit feature engineering.

Report the accuracy, ROC AUC values, and confusion matrix for this model. How does this model perform?

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 5

Fit a basic random forest model. Estimate a random forest model to predict grass as a function of all the other variables in the dataset (except id). In order to do this, you need to impute missing values for all the predictor columns. This means replacing missing values (NA) with plausible values given what we know about the other observations.

To do this you should build a feature engineering recipe that does the following:

- Omits the id column as a predictor

- Removes rows with an NA for grass (we want to omit observations with missing values for outcomes, not impute them)

- Uses median imputation for numeric predictors

- Uses modal imputation for nominal predictors

- Downsamples the outcome of interest to have an equal number of observations for each level

Fit the model using the cross-validated folds and the ranger engine, and report the accuracy, ROC AUC values, and confusion matrix for this model. How does this model perform?
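The recipe steps listed above could be sketched as follows on simulated data. This assumes the {themis} package is installed for step_downsample(), which is not among the packages named in this assignment. The toy data frame and object names are hypothetical, and step_rm() is only one of several ways to keep id out of the predictors.

```r
library(tidymodels)
library(themis) # assumption: supplies step_downsample()

# Simulated data with missing values in outcome and predictors
set.seed(2951)
toy <- tibble(
  id = 1:300,
  grass = factor(sample(
    c("should be legal", "should not be legal", NA),
    size = 300, replace = TRUE, prob = c(0.5, 0.2, 0.3)
  )),
  age = as.numeric(sample(c(18:89, NA), 300, replace = TRUE)),
  sex = factor(sample(c("female", "male", NA), 300, replace = TRUE))
)

toy_rec <- recipe(grass ~ ., data = toy) |>
  # drop the identifier so it is never used as a predictor
  step_rm(id) |>
  # drop rows with a missing outcome instead of imputing them
  step_naomit(grass) |>
  step_impute_median(all_numeric_predictors()) |>
  step_impute_mode(all_nominal_predictors()) |>
  # balance the outcome classes in the training data
  step_downsample(grass)

# Inspect the processed training data
toy_baked <- toy_rec |>
  prep() |>
  bake(new_data = NULL)

count(toy_baked, grass)
```

Baking with new_data = NULL returns the processed training data, which is a quick way to confirm that no missing values remain and that both outcome levels have the same number of rows.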

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 6

Fit a nearest neighbors model. Estimate a nearest neighbors model to predict grass as a function of all the other variables in the dataset (except id). Use {recipes} to pre-process the data as necessary to train a nearest neighbors model. Be sure to also perform the same pre-processing as for the random forest model (e.g. omitting NA outcomes, imputation). Make sure your step order is correct for the recipe.

To determine the optimal number of neighbors, tune over at least 10 possible values.

Tune the model using the cross-validated folds and report the ROC AUC values for the five best models. How do these models perform?
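A hedged sketch of tuning the number of neighbors over an explicit grid of 10 values, on simulated data. It assumes the {kknn} package is installed for the "kknn" engine. The grid values, fold count, and object names are illustrative, and the NA handling, imputation, and downsampling steps are left out here only to keep the example short.

```r
library(tidymodels) # the "kknn" engine also needs {kknn} installed

set.seed(2951)
toy <- tibble(
  grass = factor(sample(
    c("should be legal", "should not be legal"),
    size = 300, replace = TRUE
  )),
  age = rnorm(300, mean = 48, sd = 17),
  sex = factor(sample(c("female", "male"), 300, replace = TRUE))
)
toy_folds <- vfold_cv(toy, v = 5)

# Distance-based models need numeric predictors on a common scale
knn_rec <- recipe(grass ~ ., data = toy) |>
  step_dummy(all_nominal_predictors()) |>
  step_zv(all_predictors()) |>
  step_normalize(all_numeric_predictors())

knn_spec <- nearest_neighbor(neighbors = tune()) |>
  set_engine("kknn") |>
  set_mode("classification")

knn_wf <- workflow() |>
  add_recipe(knn_rec) |>
  add_model(knn_spec)

# Ten candidate values for the number of neighbors
knn_grid <- tibble(neighbors = seq(1L, 28L, by = 3L))

knn_res <- tune_grid(
  knn_wf,
  resamples = toy_folds,
  grid = knn_grid,
  metrics = metric_set(roc_auc)
)

knn_best <- show_best(knn_res, metric = "roc_auc", n = 5)
```

Dummy encoding comes before normalization here so that every predictor is numeric and on a comparable scale when distances are computed.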

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 7

Fit a penalized logistic regression model. Estimate a penalized logistic regression model to predict grass. Use the same feature engineering recipe as for the nearest neighbors model.

Tune the model over its two hyperparameters: penalty and mixture. Create a data frame containing combinations of values for each of these parameters. penalty should be tested at the values 10^seq(-6, -1, length.out = 20), while mixture should be tested at values c(0, 0.2, 0.4, 0.6, 0.8, 1).
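One way to build that data frame of combinations is with expand_grid() from {tidyr}, which crosses every penalty value with every mixture value. The object name glmnet_grid is arbitrary.

```r
library(tidyr)

# Every combination of the 20 penalty and 6 mixture values
glmnet_grid <- expand_grid(
  penalty = 10^seq(-6, -1, length.out = 20),
  mixture = c(0, 0.2, 0.4, 0.6, 0.8, 1)
)

nrow(glmnet_grid)
```

With 20 penalty values and 6 mixture values the grid has 120 rows, so each resample fits 120 candidate models.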

Tune the model using the cross-validated folds and the glmnet engine, and report the ROC AUC values for the five best models. Use autoplot() to inspect the performance of the models. How do these models perform?

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 8

Tune the random forest model. Revisit the random forest model used previously. This time, implement hyperparameter tuning over the mtry and min_n hyperparameters to find the optimal settings. Use at least ten combinations of hyperparameter values. Report the five best combinations of values and their ROC AUC values. How do these models perform?

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Exercise 9

Pick the best performing model. Select the best performing model. Train that recipe + model using the full training set and report the accuracy, ROC AUC, and confusion matrix using the held-out test set of data. Visualize the ROC curve. How would you describe this model’s performance at predicting attitudes towards the legalization of marijuana?
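The final evaluation step might look like the sketch below, which uses simulated data and a plain logistic regression as a stand-in for whichever model performs best. The data-generating process and object names are made up for illustration. Note the backticks around the probability column, which are needed because the factor level contains spaces.

```r
library(tidymodels)

# Simulated data: older respondents are less likely to favor legalization
set.seed(2951)
n <- 400
age <- runif(n, min = 18, max = 89)
p_legal <- plogis(2 - 0.04 * age)
toy <- tibble(
  age = age,
  grass = factor(
    ifelse(runif(n) < p_legal, "should be legal", "should not be legal"),
    levels = c("should be legal", "should not be legal")
  )
)
toy_split <- initial_split(toy, prop = 0.75)

final_wf <- workflow() |>
  add_formula(grass ~ age) |>
  add_model(logistic_reg())

# last_fit() trains on the training set and evaluates on the test set
final_res <- last_fit(
  final_wf,
  split = toy_split,
  metrics = metric_set(accuracy, roc_auc)
)

final_metrics <- collect_metrics(final_res)
final_preds <- collect_predictions(final_res)
final_cm <- conf_mat(final_preds, truth = grass, estimate = .pred_class)

# ROC curve for the first factor level, "should be legal"
roc_plot <- final_preds |>
  roc_curve(truth = grass, `.pred_should be legal`) |>
  autoplot()
```

Using last_fit() with the original split object touches the test set exactly once, after all model selection decisions have been made.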

Now is a good time to render, commit (with a descriptive and concise commit message), and push again. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Bonus (optional) - Battle Royale

For those looking for a challenge (and a slight amount of extra credit for this assignment), train a high-performing model to predict grass. You must use {tidymodels} to train this model.

To evaluate your model’s effectiveness, you will generate predictions for a held-back secret test set of respondents from the survey. These can be found in data/gss-test.rds. The data frame has an identical structure to gss.rds, except that I have not included the grass column. You will have no way of judging the effectiveness of your model on the test set itself.

To evaluate your model’s performance, you must create a CSV file that contains your predicted probabilities for grass. This CSV should have three columns: id (the id value for the respondent), .pred_should be legal, and .pred_should not be legal. You can generate this CSV file using the code below:

    augment(best_fit, new_data = gss_secret_test) |>
      select(id, starts_with(".pred")) |>
      write_csv(file = "data/gss-preds.csv")

where gss_secret_test is a data frame imported from data/gss-test.rds and best_fit is the final model fitted using the entire training set.

Your CSV file must

- Be structured exactly as I specified above.

- Be stored in the data folder and named "gss-preds.csv".

If it does not meet these requirements, then you are not eligible to win this challenge.

The three students with the highest ROC AUC as calculated using their secret test set predictions will earn an extra (uncapped) 10 points on this homework assignment. For instance, if a student earned 45/50 points on the other components and placed in the top three, they would earn a 55/50 for this homework assignment.

Render, commit, and push one last time. Make sure that you commit and push all changed documents and your Git pane is completely empty before proceeding.

Wrap up

Submission

- Go to http://www.gradescope.com and click Log in in the top right corner.

- Click School Credentials \(\rightarrow\) Cornell University NetID and log in using your NetID credentials.

- Click on your INFO 2951 course.

- Click on the assignment, and you’ll be prompted to submit it.

- Mark all the pages associated with each exercise. All the pages of your homework should be associated with at least one question (i.e., should be “checked”).

- Select all pages of your .pdf submission to be associated with the “Workflow & formatting” question.

Grading

- Exercise 1: 2 points

- Exercise 2: 2 points

- Exercise 3: 4 points

- Exercise 4: 4 points

- Exercise 5: 8 points

- Exercise 6: 8 points

- Exercise 7: 8 points

- Exercise 8: 6 points

- Exercise 9: 4 points

- Bonus: 0 points (extra credit)

- Workflow & formatting: 4 points

- Total: 50 points

The “Workflow & formatting” component assesses the reproducible workflow. This includes:

- Following {tidyverse} code style

- All code being visible in the rendered PDF without automatic wrapping (lines no longer than 80 characters)

- Appropriate figure sizing, and figures with informative labels and legends

- Ensuring reproducibility by setting a random seed value.